Anyone tracking AI video development has likely noticed the attention around OpenAI’s Sora 2. The model and its companion app promise to push text-to-video beyond basic clip generation, with a focus on physical realism, improved steerability, and synchronized audio and video. As of October 2025, access remains limited and many technical specifications have not been published. This analysis examines the documented features, sets realistic expectations for early users, and compares Sora 2 with competing tools.

Breaking Down Sora 2’s Core Improvements

OpenAI’s official materials frame Sora 2 as a substantial advance in realism and control over earlier versions. The team’s 2025 announcement, “Sora 2 is here,” highlights a wider stylistic range, more accurate physics (particularly in motion and object interactions), and integrated synchronized audio generation. The 2025 Sora 2 System Card expands on these priorities, emphasizing physics fidelity, steerability for guiding outputs, and continuity across multiple shots.

In practical terms, Sora 2 aims to generate convincing short videos quickly from text prompts, now with audio matched to the visuals, while giving creators more precise control over camera movement, subject behavior, and scene development. OpenAI also builds provenance signals and watermarking into every output, addressing concerns about content authenticity on social platforms.

Getting Access to Sora 2 Right Now

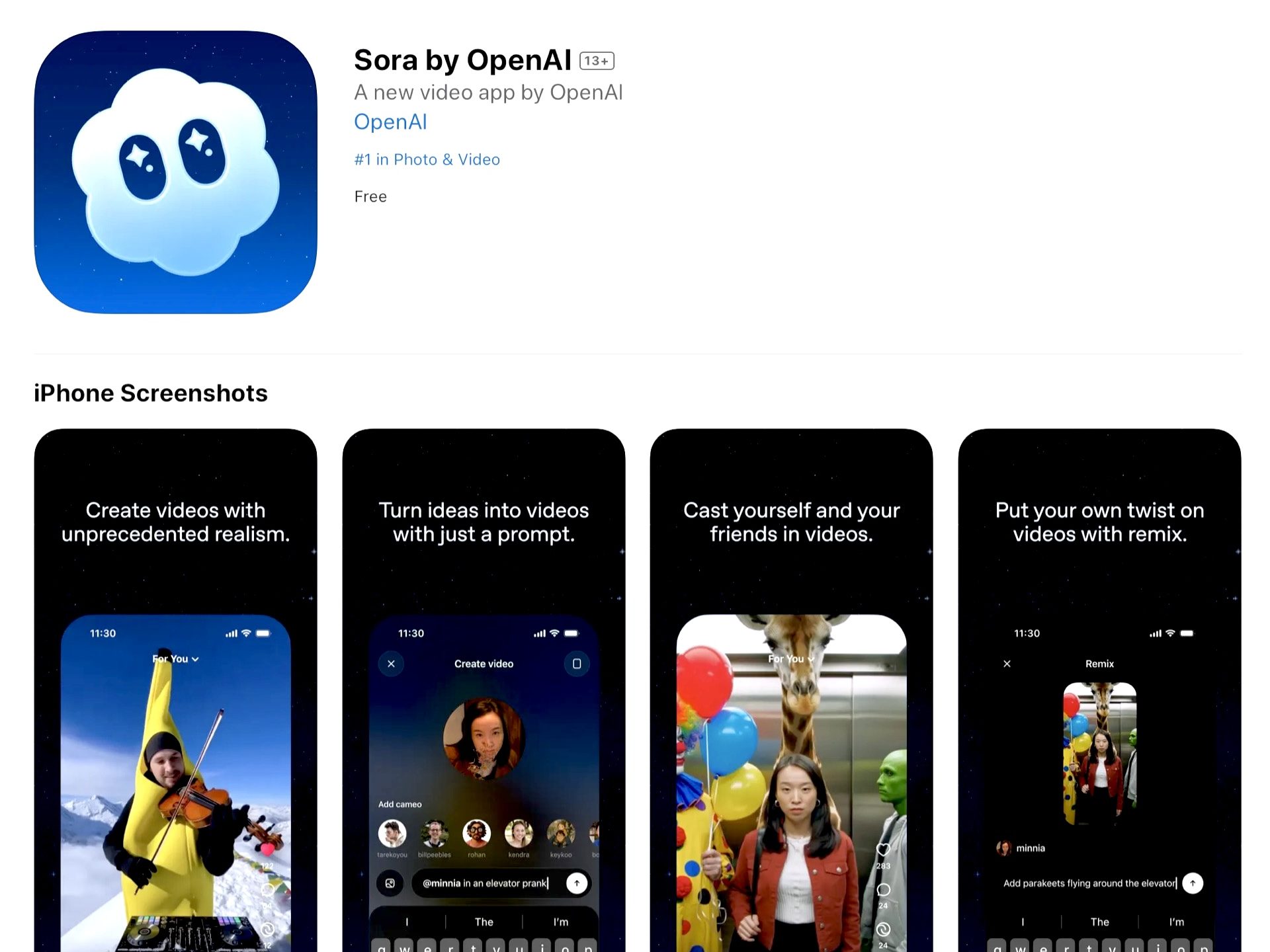

The rollout is still evolving. Technology coverage from late September 2025 confirmed that OpenAI launched a dedicated Sora app with a social feed and a “cameos” feature for inserting user likenesses. The initial release was invite-only, on iOS, in the United States and Canada; TechCrunch documented these details in its September 30, 2025 report, “OpenAI is launching the Sora app alongside the Sora 2 model.” OpenAI’s own materials mention web access and future API availability, but comprehensive public API documentation has not yet been published.

If you are still waiting for access, prepare prompts and shot sequences in advance so you can make the most of early testing. Planning around cinematography cues, physics, and scene composition will shorten the learning curve once you get in.

Understanding Safety Guardrails and Content Provenance

OpenAI details multiple layers of safeguards and provenance signals for Sora-generated content. Its 2025 article “Launching Sora responsibly” describes multimodal moderation of both inputs and outputs, stronger protections for minors, and initial restrictions on uploading photorealistic human likenesses. The company states that every generated video carries both visible and invisible provenance signals: a visible watermark at launch plus embedded C2PA metadata.

This matters for creators publishing to social networks: it lets audiences identify AI-generated material and supports broader content authenticity standards. Anyone producing sensitive or realistic content should align their workflow with OpenAI’s Usage Policies (2025) and the design principles in “Sora feed philosophy” (2025).

The Reality of Unknown Technical Specifications

This is a significant consideration for early adopters: many specifications remain unpublished. As of October 2025, OpenAI’s public documentation does not specify maximum clip duration, output resolution, frame rate, or rate limits for Sora 2, so treat any third-party claims about these as individual observations rather than confirmed specifications. Documentation updates will eventually fill these gaps; until then, plan around provisional constraints that may change.

Workflows that depend on exact durations, such as advertising slots or platform-specific formats, need flexible contingency plans. It is often more efficient to generate conceptual clips in Sora 2 and refine them in professional editing software than to attempt perfect final renders directly from the model.

Where This Technology Fits Into Real Production Work

Concept visualization: When rapid ideation matters, Sora 2’s emphasis on realism and synchronized audio helps visualize shots and establish tone without full production resources.

Short-form social content: For teasers, explainer videos, and atmospheric pieces, short clips with accurate physics and synchronized audio deliver genuine value. Early user reports suggest the model performs best in straightforward, well-defined scenarios.

Educational and marketing prototypes: Testing visual narratives or product visualizations before committing to filming becomes more accessible.

Practical approach: Structure your prompt like a detailed brief. Specify camera angles, lighting conditions, subject movements, and physical interactions, and state your audio intentions (dialogue timing, ambient sound) so the model can coordinate visuals and sound.
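One way to keep briefs consistent across iterations is to hold each element in a small structure and render it to prompt text. The sketch below is a hypothetical Python helper, not part of any Sora tooling: OpenAI has not published a prompt schema, so the field names (`subject`, `camera`, `lighting`, `action`, `physics`, `audio`) are illustrative assumptions, and the output is plain text you would paste into the app.

```python
from dataclasses import dataclass, field


@dataclass
class ShotBrief:
    """Hypothetical shot brief; field names are illustrative, not a Sora 2 schema."""
    subject: str
    camera: str
    lighting: str
    action: str
    physics: str = ""                               # optional physical-interaction note
    audio: list[str] = field(default_factory=list)  # optional audio cues

    def to_prompt(self) -> str:
        # Emit one labeled line per decision so nothing is left implicit.
        lines = [
            f"Subject: {self.subject}",
            f"Camera: {self.camera}",
            f"Lighting: {self.lighting}",
            f"Action: {self.action}",
        ]
        if self.physics:
            lines.append(f"Physical interactions: {self.physics}")
        if self.audio:
            lines.append("Audio: " + "; ".join(self.audio))
        return "\n".join(lines)


# Example brief (illustrative content only).
brief = ShotBrief(
    subject="a ceramic mug on a wooden table",
    camera="slow dolly-in at eye level",
    lighting="warm morning window light from the left",
    action="a hand lifts the mug and sets it down",
    physics="coffee sloshes slightly when the mug lands",
    audio=["soft ceramic clink on contact", "quiet room ambience"],
)
print(brief.to_prompt())
```

Keeping briefs as data makes it easy to change one variable, such as lighting, between runs while holding everything else fixed, which helps when comparing iterations.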

How Sora 2 Measures Up Against Competitors

The AI video generation market moves rapidly. Objective evaluation requires consistent criteria across platforms: realism and physics quality, audio synchronization, control and consistency capabilities, duration and resolution specifications, safety and provenance features, accessibility, and practical value.

Runway Gen-4: Runway emphasizes consistent characters and locations, realistic motion, and what it calls “world understanding.” Notably, Runway publishes concrete video specifications in its help documentation (“Creating with Gen-4 Video,” 2025), including supported durations of 5 or 10 seconds at 24 frames per second across multiple aspect ratios. For projects that need predictable clip lengths and aspect ratios today, this transparency is a tangible advantage.
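Published durations turn slot planning into simple arithmetic. The sketch below is a hypothetical helper, not anything Runway or OpenAI provides: it finds the fewest 5- or 10-second clips that fill a target slot exactly and converts seconds to frames at 24 fps. Sora 2 is entered with unknown (`None`) values because OpenAI has not published those figures, so the same calculation returns `None` for it.

```python
from typing import Optional, Sequence

# Runway figures as summarized above; Sora 2 values are None (unpublished).
SPECS = {
    "runway_gen4": {"durations_s": (5, 10), "fps": 24},
    "sora_2": {"durations_s": None, "fps": None},
}


def plan_clips(target_s: int, durations: Optional[Sequence[int]]) -> Optional[list[int]]:
    """Return the fewest clips whose lengths sum exactly to target_s.

    Returns None when durations are unknown or no exact combination exists.
    """
    if not durations:
        return None
    # best[t] holds the shortest clip list summing to t seconds (coin-change DP).
    best: list[Optional[list[int]]] = [None] * (target_s + 1)
    best[0] = []
    for t in range(1, target_s + 1):
        for d in durations:
            prev = best[t - d] if d <= t else None
            if prev is not None:
                candidate = prev + [d]
                if best[t] is None or len(candidate) < len(best[t]):
                    best[t] = candidate
    return best[target_s]


def frame_count(seconds: int, fps: Optional[int]) -> Optional[int]:
    """Frames in a clip, or None if the frame rate is unpublished."""
    return None if fps is None else seconds * fps


runway = SPECS["runway_gen4"]
print(plan_clips(30, runway["durations_s"]))            # [10, 10, 10]
print(frame_count(10, runway["fps"]))                   # 240
print(plan_clips(30, SPECS["sora_2"]["durations_s"]))   # None
```

Requiring an exact fill is a simplifying assumption; in practice you might instead trim a longer clip in an editor.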

Google Veo: Google’s Veo model targets sophisticated video generation and reaches users through specific Google channels: the Gemini app for certain subscribers, Vertex AI, and selected creative tools. DeepMind’s Veo page (2025) provides an official capability overview. As with Sora 2, detailed public specifications for duration and resolution are limited, so verify them within your own account before committing to production.

Luma Dream Machine and Pika: Both platforms offer accessible web experiences with regular model improvements. However, their public documentation currently gives few concrete specifications for duration, resolution, or audio capabilities, so test within each platform to confirm its constraints before planning production.

A Framework for Evaluating AI Video Tools

Rather than assigning premature numeric ratings, this framework enables fair evaluation during hands-on testing:

Realism & physics fidelity (25%): Do materials, movements, and interactions appear convincing without obvious visual artifacts? Early demonstrations and OpenAI’s positioning emphasize this strength, but verification across your specific scene types remains essential.

Audio quality & synchronization (15%): Are lip movements and sound effects timed believably? OpenAI indicates synchronized audio capability; to confirm it, test with dialogue sequences and percussive sounds.

Steerability & multi-shot consistency (20%): Can you effectively guide camera movements and maintain subject continuity across multiple shots? OpenAI materials stress improved steerability; consistency across shots represents an ongoing industry challenge.

Output constraints—duration, resolution, rate limits (10%): Are limitations clearly documented and sufficient for your platform requirements? Mark this criterion as “Insufficient data” where official specifications don’t yet exist.

Safety & provenance measures (10%): Are watermarking and metadata present and functional? OpenAI specifies visible and invisible provenance at launch; confirm these features appear in your exported files.

Accessibility & ecosystem—app, API roadmap (10%): How straightforward is initial access and potential integration? Sora’s invite-only application and developing API timeline may create adoption delays for some teams.

Practical value relative to constraints (10%): Does Sora 2 meaningfully accelerate ideation and short-form production today given current access limitations?

Record observations against each criterion during your first week with Sora 2. This keeps evaluations grounded and comparable across tools.
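Once you have hands-on observations, the percentages above can be combined into a weighted average. This Python sketch assumes a 1 to 5 scale per criterion (the framework does not prescribe one) and treats “Insufficient data” as `None`: those criteria are excluded and the remaining weights renormalized, and the scored share of total weight is reported alongside so gaps stay visible. The criterion keys are hypothetical labels, and the scores in the example are placeholders, not measurements of any tool.

```python
from typing import Optional

# Weights from the framework above (they sum to 100).
WEIGHTS = {
    "realism_physics": 25,
    "audio_sync": 15,
    "steerability_consistency": 20,
    "output_constraints": 10,
    "safety_provenance": 10,
    "accessibility_ecosystem": 10,
    "practical_value": 10,
}


def weighted_score(scores: dict[str, Optional[float]]) -> tuple[Optional[float], float]:
    """Combine per-criterion scores into (score, coverage).

    None means "Insufficient data": the criterion is dropped and the remaining
    weights are renormalized. Coverage is the share of total weight scored.
    """
    unknown = set(scores) - set(WEIGHTS)
    if unknown:
        raise KeyError(f"unknown criteria: {sorted(unknown)}")
    scored = {k: v for k, v in scores.items() if v is not None}
    covered = sum(WEIGHTS[k] for k in scored)
    if covered == 0:
        return None, 0.0
    total = sum(WEIGHTS[k] * v for k, v in scored.items())
    return total / covered, covered / sum(WEIGHTS.values())


# Placeholder scores for illustration only.
example = {
    "realism_physics": 4,
    "audio_sync": 4,
    "steerability_consistency": 3,
    "output_constraints": None,  # "Insufficient data"
    "safety_provenance": 5,
    "accessibility_ecosystem": 2,
    "practical_value": 3,
}
score, coverage = weighted_score(example)
print(f"{score:.2f} over {coverage:.0%} of the weight")  # 3.56 over 90% of the weight
```

Renormalizing is one design choice; scoring unknowns as zero would instead penalize tools with unpublished specifications, conflating missing documentation with poor performance.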

Making the Decision to Adopt Now or Wait

Content creators and social media teams: If you already work with AI video tools, early access offers advantages—particularly for conceptual work and teasers. Plan for prototyping rather than immediate final production until specifications and rate limits gain clarity.

Educators and marketing professionals: Sora 2 shows promise for lesson visualizations, product explainers, and mood boarding. Approach it as an ideation accelerator while maintaining traditional editing tools for polish and compliance requirements.

Agencies and enterprise teams: Consider waiting for broader access and policy clarification if your deliverables demand fixed durations, specific resolutions, or rigorous brand safety protocols.

For those building comprehensive creative toolsets, a hybrid strategy works well: generate concepts in Sora 2 or a competitor, then finalize in professional editing software. This approach hedges against evolving constraints and moderation policies.

Important Considerations for Early Testing

Keep specifications flexible: until OpenAI publishes concrete details about clip length, resolution, and frame rate, deliverables should accommodate potential changes.

Respect policy boundaries by reviewing OpenAI’s 2025 guidance in “Launching Sora responsibly” and the Usage Policies. Expect moderation on certain prompt types and uploads.

Track the rollout progression: TechCrunch’s 2025 coverage of the iOS application and invite system indicates staged expansion. Those requiring API access should monitor official developer documentation.

Document your testing systematically: Use consistent prompts, note iteration requirements, and record results against the evaluation framework above. This enables objective comparison between Sora 2 and alternatives like Runway and Veo over time.

Where Sora 2 Stands Today

Sora 2 represents meaningful progress toward accessible AI-generated video, with enhanced realism, synchronized audio, and provenance built into the design. The main current limitations are restricted access and unpublished technical specifications. Treating it as an ideation tool for short clips, rather than a complete production solution, lets you extract genuine value while the ecosystem matures.

When choosing a tool for a specific project, weigh the appeal of cutting-edge realism against the practical value of published specifications, an area where competitors like Runway currently hold an advantage. As public documentation expands and more creators run reproducible tests, the landscape will come into sharper focus.
