Adobe showcased experimental artificial intelligence technologies at its annual MAX Sneaks event in Los Angeles this week, unveiling prototype tools that could change how creative professionals edit video, manipulate lighting, and design digital content. The October 29 demonstration, hosted by comedian Jessica Williams before an audience of over 10,000 attendees, provided a glimpse into Adobe’s research and development pipeline.

Frame-by-Frame Editing Becomes One-Click Process

The standout demonstration was Project Frame Forward—an AI-powered system that applies edits to an entire video clip based on changes made to a single frame. During the live demo, researchers showed how removing a speeding car from one frame of drone footage automatically eliminated the vehicle from the entire sequence, including its smoke trail and shadows.

“Imagine if editing video was as simple as editing an image,” said Adobe’s Paul Trani, introducing the technology. Users edit one frame in Photoshop, and the AI then propagates those changes throughout the entire video clip without requiring manual tracking or masking.

In another demonstration, researchers added a puddle to a single frame of video showing a walking cat. The AI not only propagated the puddle across the entire video sequence but automatically generated accurate reflections of the cat as it moved, updating the reflection’s position and angle throughout the clip.

This capability addresses one of video editing’s most tedious challenges: maintaining consistency across frames when making alterations. Traditional workflows require frame-by-frame adjustments or complex motion tracking that consumes hours of editor time. Frame Forward collapses this into a single edit that the AI carries forward through every frame of the clip.

Lighting Control Redefines Post-Production Capabilities

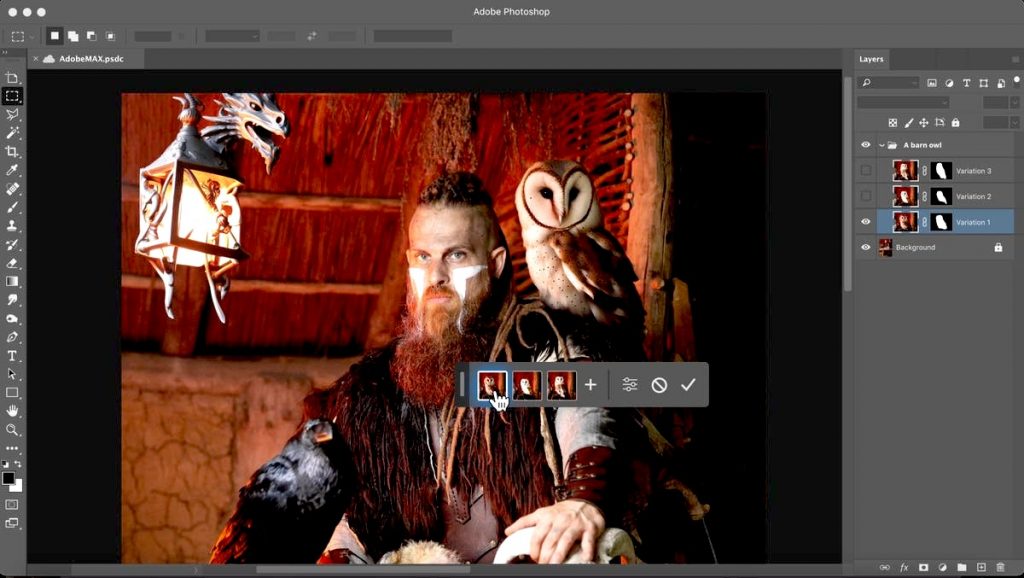

Project Light Touch emerged as another crowd favorite, generating some of the evening’s most enthusiastic reactions. This generative AI tool allows photographers to adjust lighting on images after capture, effectively providing post-production control over light sources, their intensity, and direction.

The technology demonstrated the ability to transform day into night, add dramatic lighting at specific angles, and even correct common portrait photography issues like uneven illumination caused by hats or other obstructions. Most impressively, the tool’s “Spatial Lighting Mode” lets users move light sources around a 3D representation of the scene, with the software automatically adjusting shadows, reflections, and ambient lighting accordingly.

This represents a fundamental shift in photography workflow. Traditionally, lighting decisions made during capture are largely fixed—photographers can brighten or darken images, but repositioning light sources requires reshooting. Light Touch decouples lighting from the moment of capture, enabling photographers to experiment with illumination scenarios that never existed in the original scene.

The 3D spatial manipulation is particularly impressive because it suggests an understanding of scene geometry. Rather than simply pasting new lighting onto the image, the system appears to account for surface orientations, material properties, and how light interacts with the environment to generate physically plausible results.

Advanced Object Removal and Surface Manipulation

Project Trace Erase represents Adobe’s next-generation approach to object removal, moving beyond simple content-aware fill to understand and eliminate the cascading effects of removed objects. In a demonstration that removed a lamp from a room, the tool automatically eliminated not just the lamp itself, but also the warm glow it cast on surrounding walls and its reflection in windows.

Project Surface Swap showcased AI-powered texture recognition that can select and replace materials while preserving original lighting and perspective. The technology demonstrated seamless replacement of car paint colors, furniture upholstery, and flooring with a single click, automatically handling complex reflections and surface properties.

These tools tackle challenges that traditionally require painstaking manual work. Removing objects convincingly means addressing every trace of their presence: the shadows they cast, the light they reflected, the atmospheric effects they created. Surface swapping that looks natural requires understanding material properties and how they interact with lighting, while keeping perspective consistent. Automating these steps with AI could yield significant productivity gains.

Industry Context and Commercial Implementation

These experimental tools arrive as creative professionals face mounting pressure to produce more content faster. Estée Lauder’s Too Faced brand recently used Adobe’s AI tools to create its first fully AI-generated advertising campaign, reducing a typical four-day editing process to one day.

Adobe’s research follows a rigorous selection process, with approximately 200 project proposals narrowed to the small number showcased at Sneaks. While not all experimental technologies reach commercial products, many previous Sneaks demonstrations have eventually been integrated into Adobe Creative Cloud applications. One example is the Harmonize tool for Photoshop, which arrived this year after first appearing at the 2024 event.

The path from Sneaks prototype to shipping product varies considerably. Some demonstrations represent early-stage research exploring technical feasibility, while others arrive nearly product-ready. Adobe uses Sneaks both to gauge user interest and to identify which experimental directions justify additional investment toward commercialization.

Technical Challenges in AI Video Processing

The technologies demonstrated address genuinely difficult problems in computer vision and video processing. Propagating edits across video frames while maintaining temporal consistency requires tracking object motion, handling occlusion when objects move behind others, and preserving visual coherence despite frame-to-frame variations.
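To make the propagation problem concrete, here is a deliberately tiny sketch in Python. It is not Adobe’s method, which has not been published. It assumes the per-frame motion of the edited region is already known as whole-pixel offsets (the hypothetical `motions` list), which is exactly the part a real system would have to estimate, and it ignores occlusion entirely.

```python
from typing import List, Tuple

Mask = List[List[int]]  # 1 = pixel touched by the edit, 0 = untouched


def shift_mask(mask: Mask, dx: int, dy: int) -> Mask:
    """Move every marked pixel by (dx, dy); pixels that leave the frame are dropped."""
    height, width = len(mask), len(mask[0])
    shifted = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            if mask[y][x]:
                nx, ny = x + dx, y + dy
                if 0 <= nx < width and 0 <= ny < height:
                    shifted[ny][nx] = 1
    return shifted


def propagate_mask(key_mask: Mask, motions: List[Tuple[int, int]]) -> List[Mask]:
    """Return the keyframe mask followed by one shifted mask per motion step."""
    masks = [key_mask]
    for dx, dy in motions:
        masks.append(shift_mask(masks[-1], dx, dy))
    return masks


# Hypothetical 4x3 keyframe with a single edited pixel at x=1, y=1.
key = [
    [0, 0, 0, 0],
    [0, 1, 0, 0],
    [0, 0, 0, 0],
]

# Assumed motion: the edited object drifts one pixel to the right per frame.
frames = propagate_mask(key, [(1, 0), (1, 0), (1, 0)])
```

In this toy run the edited pixel moves right each frame and disappears once it leaves the frame. Estimating the motion, coping with objects that pass behind one another, and keeping the filled-in pixels visually stable are the hard parts that this sketch leaves out.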

Lighting manipulation that appears realistic demands an understanding of scene geometry, material properties, and physically based rendering principles. The AI must essentially reverse-engineer how light interacted with the scene, then synthesize new lighting scenarios that respect physical constraints.
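One of the simplest physically based rendering rules, Lambert’s cosine law, shows why geometry matters for relighting: a matte surface’s brightness depends on the angle between its normal and the light direction. The sketch below is an illustration only, not how Light Touch works. The function names, the albedo value, and the light directions are all assumptions chosen for the example.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def normalize(v: Vec3) -> Vec3:
    """Scale a vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return (v[0] / length, v[1] / length, v[2] / length)


def lambert_shade(albedo: float, normal: Vec3, light_dir: Vec3) -> float:
    """Diffuse brightness of a matte surface: albedo * max(0, n . l)."""
    n = normalize(normal)
    l = normalize(light_dir)
    cos_angle = sum(a * b for a, b in zip(n, l))
    # Surfaces facing away from the light receive no direct illumination.
    return albedo * max(0.0, cos_angle)


# Hypothetical surface facing the camera along +z, with an assumed albedo of 0.8.
surface_normal = (0.0, 0.0, 1.0)

front_lit = lambert_shade(0.8, surface_normal, (0.0, 0.0, 1.0))   # light head-on
side_lit = lambert_shade(0.8, surface_normal, (1.0, 0.0, 1.0))    # light at 45 degrees
back_lit = lambert_shade(0.8, surface_normal, (0.0, 0.0, -1.0))   # light from behind
```

Moving the light changes the result only through the surface normal, so a relighting tool needs a per-pixel estimate of normals and albedo before it can apply even this basic rule. Shadows, reflections, and glossy materials add further terms that are not modeled here.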

Object removal that also eliminates cascading effects requires not just segmenting the object but also identifying all of its secondary and tertiary effects: reflected light, cast shadows, atmospheric scattering, and reflections in surfaces. Each of these presents distinct technical challenges that the AI must address simultaneously.

Implications for Creative Workflows

If these tools reach production, they could dramatically reshape video editing and photography workflows. Tasks that currently require hours of specialized work could become accessible to a much wider range of users.

However, this accessibility raises questions about creative value and employment in production industries. When sophisticated edits become one-click operations, does the creative work shift entirely to ideation and direction? Do technical skills that currently command premium rates lose economic value?

The tools also introduce new creative possibilities that weren’t practically achievable before. If adding complex reflections or repositioning lighting becomes trivial, creators can experiment with scenarios previously limited by technical constraints or budget. This democratization of advanced techniques could enable new forms of creative expression.

Timeline and Availability

Adobe provided no specific timeline for when or if these experimental technologies will reach commercial products. The Sneaks format explicitly presents research prototypes without promises of productization, allowing the company to explore ambitious directions without commitment.

Users interested in these capabilities should monitor Adobe Creative Cloud updates, as successful Sneaks demonstrations often appear in products within one to two years. However, some technologies remain perpetually experimental due to technical limitations, computational costs, or unclear market fit.

The MAX conference typically includes announcements of shipping features alongside Sneaks prototypes, helping distinguish near-term product updates from longer-term research directions. This year’s event demonstrated Adobe’s continued investment in AI-powered creative tools, whether or not these specific prototypes ever reach users’ hands.
